Code for America Summit 2024, Day 1

Why states care more about customer experience than ever before (main stage)

Original thread on Mastodon

At CFA Summit and snuck up to the first few rows where there are actually seats, vs the standing-room only in the back. 😅

Re: states being at the forefront of government services, “you gotta be where the people are.” Even more true during COVID!

MN has a North Star of being the best place to raise a child. They’re thinking digital-first to get all the services together.

When working in government services, everyone has to be all-in on customer service. Listen for the “they’s” when trying to break through red tape, and talk through ways of working to get past it.

At the state level, it’s so important to get from policy to solution quickly, because that means a family gets to put food on the table. You have to build quickly but also sustainably.

If you encounter a constraint, look into whether the constraint can be removed. “Can we move that fence back so we don’t keep hitting it?” Book rec: “Hack your Bureaucracy” by Marina Nitze and Nick Sinai.

Breaking barriers by building accessible digital services (main stage)

Original thread on Mastodon

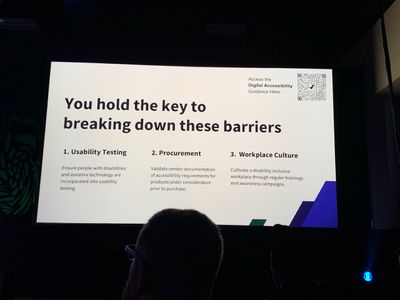

Cassie Winters from OMB is up at the CFA Summit main stage to talk about digital accessibility. 1 in 4 adults in the US live with a disability. But 48% of popular Federal websites have accessibility errors. And there are 60 accessibility errors per page across the top 1 million websites. We have a lot of work to do!

OMB has Digital Accessibility Guidance, but it’s still just words on a page. It’s the people who work in government services who hold the key to making services accessible. Do usability tests, validate accessibility vendor work through good procurement, and cultivate a workplace culture where accessibility is paramount.

Exploring AI insights across civic tech (main stage)

Original thread on Mastodon

The big bugaboo in government (and CFA Summit) now is AI. Justin Brown of the Center for Public Sector AI says we have to build foundations for AI. Together, we have to set a foundation of ethical, outcome-driven cultures and values.

Traci Walker of the Digital Services Coalition says we need to have more convos with trusted partners and do more market research before just procuring anything that says “AI.” The AI landscape is full of snake oil salespeople right now!

We can also leverage AI during the procurement process—can AI augment staff capabilities?

You also need good data to have good AI. So any AI-related procurement needs sections about good data too.

Jonathan Porat, CTO of CA State, says to build experimentation into any AI work and test test test!

Sanmi Koyejo of Stanford says humans make decisions slowly, but AI tech can make decisions quickly, and “reflects back to us what we are.”

Government should actually engage with AI to demystify it and make it human-centered to augment human capabilities instead of replacing people (which he thinks is problematic). We should also build measurement tools to see which tools are good and which ones are bad, so expectations match reality.

In CA, the teams managing the oldest systems (like UNIX) are now also managing AI projects and platforms. The old guard can do cutting-edge work too!

When understanding AI ethics, Traci thinks soft skills will be even more important than the tech itself. We can also open up data and information for education using AI.

Human-centered marketing and communication to drive service uptake (main stage)

Original thread on Mastodon

Katie Fiore of NJ’s Office of Innovation talks about marketing government services. The way that most governments talk about their services is antithetical to people’s actual concerns: “Nobody cares!” (Personal note: AMEN!!!!! 🙌)

You have to meet people where they are. When people drop out of college, they aren’t just demotivated, but research also showed they were grieving. Writing marketing toward that helped uptake by more than 100%.

And NJ Office of Innovation has a marketing playbook!

Kara Swisher receives CfA’s Legacy Achievement Award (main stage)

Original thread on Mastodon

LOL Kara Swisher says “I could kill a techie [or] small poodle” with her award. And reminds us that we “bought and paid for the internet” so it should help us, we shouldn’t be beholden to it.

(And also reminds us that she’s still working even though she’s been getting all these lifetime achievement awards. “I might die with Elon Musk on Mars, in 2045…only one of us will make it, and it won’t be him!”)

“It will be the people doing malevolent things with AI who will kill us. Focus on humanity a little more, and less on the technology.” So important to develop a democratic way of creating technology. “Virtuality is taking over reality.” Let’s not be blind to everything that’s happening.

Fireside chat about AI (main stage)

Original thread on Mastodon

Last fireside chat on the CFA Summit main stage this morning is with Dr. Safiya Noble and Khari Johnson.

At first, people thought code was neutral because “it’s just math” but Safiya’s book “Algorithms of Oppression” looked into the results of keyword searches. Asian and Black women were associated with pornography in search engines. People said “that’s what people are searching for” but is that what Asian and Black women are searching for? (We’re people too!)

Government has to be the guardrails for runaway tech, although we don’t have good enforcement mechanisms right now. Maybe the FTC. There has been a lot of deregulation happening, with lots of tax breaks going to some of the largest, most powerful companies in the world. Government needs to hold companies accountable. And Gen Z is not having it, so we might recoup some losses there.

Dr. Safiya Noble is really concerned about AI’s effect on the environment. One recent finding was that one prompt into a generative AI system is the equivalent of pouring 5 cups of water onto the ground. She thinks we’ll look back and say it’s not worth it.

If we’re going to use AI, we should use it to solve real problems, like weather patterns and planned migration. Nobody really wants to talk to an AI when they call their bank!

Dr. Safiya Noble talks about an easy-to-read white paper from the UK government about AI—procurement was a huge part of it. (I THINK it’s this white paper.)

More tech isn’t going to solve all the problems. What we input into tech systems can follow us through our lives. We need to work with each other and with systems, and not just rely on technology.

Dr. Safiya Noble hopes that we can embrace our better selves and not go to the worst. She says New Zealand writes policies in which the earth is a stakeholder, and which must stand for 70 years. How can we think long term and not just short term?

Making lemonade: When well-intentioned legislation is at odds with user needs (breakout)

Original thread on Mastodon

At the CFA Summit breakout session with Victoria Kidd from Bloom Works and Abigail Fisher from Colorado Digital Service.

Colorado needed to track “beds” for behavioral health services. They interviewed providers on “bed” definitions, their current data inputting experience, and what data they would need to understand to use a bed tracking system. They needed this info to develop a usable bed-tracking solution.

One key insight: Bed tracking alone won’t improve care navigation. It’s not just about beds, but “getting appropriate care to facilitate healing.”

They made a journey map to visualize how CO has an inconsistent system that’s built on…faxes. But you can’t filter with faxes, and you don’t even know if your fax has been seen. You can’t work with data that way.

Second key insight: Providers don’t see the benefits of all their reporting efforts. When staffing is reduced, they’re focused on keeping their doors open, not inputting data to the state! This is an administrative burden crisis.

Third key insight: Reporting requirements don’t fit into provider workflows. Providers need the data to be real-time to be useful! The new system needed to be built to talk to existing systems (APIs!), or find some way to reimburse providers for the extra manual inputting work.

Following legislative parameters was already like threading a needle, but adding reality to it became like threading an even smaller needle! To work with this, they had to pinpoint the spirit of the legislation. They engaged leadership in a phased approach to build clinical+provider-focused features on top of the tracking data.

Another strategy was to balance burden and benefit for the providers. You have to give back when you ask something of someone, especially an overly burdened provider! They proposed creating a provider directory to support care navigation with the bed tracking.

The last strategy was to build for standardization. They encouraged leadership to move beyond 1 law = 1 tech system. They looked at international standards like Open Referral so they could build in more capacity later.

The thread they chose: not building a “bed tracker” at all, but building in the capability to collect bed data along with a provider directory to make it all actionable and integrated into the care navigation journey.

This pivot required socialization and trust, identifying beliefs central to the misalignment.

The legislation assumed that the standalone bed tracker WOULD improve care in CO state, so they had to help everyone release that assumption. They had to break mental models, especially since the idea of “bed tracking” had been around for a long time! Better to integrate into a cohesive system.

To socialize the new idea, you have to get people involved! Find out how they got to where they are so you can work with them to move forward. This requires time! It helped that the research was done in phases.

Socializing new ideas also requires having hard conversations. You have to walk through the legislative ideals from all points of view (state needs vs provider needs) so you can see the discrepancies and talk through them. You have to get the right people in the room at the right time, and keep each other updated on the convos that are happening.

Victoria and Abigail remind us that these hard conversations are HARD. They felt AWFUL when they were happening, but slowly, people’s minds started changing.

And they’re launching Client Care Search this week!!!!

🔥 insight from the Q&A: Another assumption from the legislation is that there are enough beds in CO, you just need to find them. But that’s not true—there’s just not enough beds! Or sometimes there’s not enough staff, because even if you have a bed, it can’t be used if there isn’t staff to support the patient! This is where data can be used in legislation—finding out how reality works. (Personal note: this is how I feel about affordable housing…)

When working with stakeholders on this pivoted solution, they emphasized that they were working in phases. So it wasn’t a “no,” they’d simply be doing the thing later.

Delivering more value by democratizing research with care (breakout)

Original thread on Mastodon

Cheering on my former SF Digital Services team members for the following CFA Summit breakouts! Currently it’s Nadine Levin and Jennifer Ng about democratizing research.

Many researchers who fled academia can be defensive about their research space, but lack of resources often results in non-researchers doing research. But is this a good thing?

Several questions come up when democratizing research: What IS research? Do rigor and quality matter? What counts as relevant expertise?

In the research community, there are fears about job security and narrowing down to only tactical design research (assumed to be “safe” or “low-impact” which is not necessarily true!).

When you do/frame/present research poorly, there are risks to the project, the research practice, organization, and community.

When democratizing research, you also need to consider how to empower stakeholders in other ways, like asking why the org wants to research, and how research can help the org.

SFDS’s past model involved non-researchers doing all the research and having a third-party non-profit handle the recruitment, before Nadine and Jennifer looked to update the process.

Nadine and Jennifer’s backgrounds gave them a certain POV about research, coming from a large research team and being a research team of one, respectively.

Is “just talking to people (actually coworkers)” research? This might result in data bias: you don’t hear directly from users, and imprecise observations can be encoded as research. Doing new research can also be costly when following best practices, drawing on past research, and using metrics can suffice.

You have to encourage thoughtful decision-making, defining research while not discouraging stakeholders from talking to people.

The monthly rolling research model (with non-researchers using templates) resulted in lots of good data. But without an expert researcher to guide the synthesis, the findings were limited in impact and the conclusions were sometimes inaccurate. And there was pressure in “doing research” every month instead of focusing on impactful insights.

When Nadine and Jennifer joined the team, they redefined the researcher role to evaluate the research need. They also looked at how to involve stakeholders.

When doing research with marginalized communities (ex: monolingual speakers), you have to be sensitive to their needs for safety and comfort, and be aware of the power dynamics. Someone doing research could do real harm if they haven’t had training, like trauma-informed research.

For doing research with marginalized communities, Nadine and Jennifer created templates for participant reach out and discussion guides. And they had to talk it out with stakeholders, who were used to sending anyone who spoke Spanish out to do research with monolingual Spanish speakers.

When democratizing research, you have to understand the needs of your organization and staff. Is it about capacity building or transparency? For SFDS, it was about transparency and getting involved in the data collection.

While democratizing research, it’s important to demonstrate best research practices, for the team and for the stakeholders. You have to have those hard convos to build trust. This is especially important when talking about risk. Research considerations should be framed around risk.

You can empower stakeholders by having a researcher guide the team through messy data if a team lacks time to do research and there is lots of risk involved.

A researcher could also coach the team to do research, if they’re subject matter experts, to build research capacity on lower-risk work.

When Jennifer was a researcher guide, she checked in with stakeholders constantly, asked about their experience, and showed clips. That helped embody research practices before she wrote the report. It helped build in research thinking, but it took a long time—6 months full-time. Sometimes stakeholders would get to a knee-jerk insight from an observation, but Jennifer was there to guide them through it.

Nadine calls up SFDS project manager Amy Martin to talk about her experience getting coached on working on a qualitative survey! This also took a long time (more than 6 months), but she really appreciated getting the support. The more Amy got into research, the more she realized what she didn’t know about research, but that’s ok if you expect that.

Nadine assures us that Amy is underselling the impact of her qualitative survey, and emphasizes the importance of doing rigorous, impactful research even if it takes more time. (She also now calls this “collaborative research” instead of “democratizing research.”)

From Q&A: In government, there isn’t an institutional review board like there is in academia. 😅 So that actually puts more responsibility on the researchers to ensure everything is done ethically and safely.

Attendee: “It sounds like you ARE the IRB!”

Nadine: “Yeah, but I don’t want to do that!”

From Q&A: When educating people about how feedback/surveys are not necessarily research, step back from the “how” and get to the “why.” It takes time, but you have to build that trust!

More than an afterthought: The necessity of training government workers on technology (breakout)

Original thread on Mastodon

In the front row again for a former SF Digital Services teammate, this time Amy Martin presenting with Caitlin Seifritz at City of Philadelphia about training non-techies in government! Fun fact: they are both former librarians and most importantly, both have small dogs!

In SF, they’ve moved website training from live webinars to self-paced, with direct access to the website. In Philly, they have limited access to the website system during training.

But in all government, there’s a gap between what traditional government workers and digital service teams know about technology. Not knowing how to connect the gap often causes projects to fail due to communication errors. Training is a way to bridge this gap and build relationships.

Amy and Caitlin wanted to know how other digital service teams did training, so they created a qualitative survey with 25 questions that got 24 responses. “Qualitative” meaning it did not assess trends or compile numeric statistics, hoping to get deep with fewer responses. (It would have been really hard with hundreds of responses!)

Training survey findings!

Common training topics:

- Plain language

- Web accessibility

- User-centered websites

General tips:

- Interactivity

- 1:1 help as follow-up like office hours

- Small-group cohorts

- Having someone coordinating trainings from big-picture perspective

Both learners and digital service teams struggle to make training a priority. Everyone is busy and has limited capacity! But some orgs also didn’t prioritize the trainings or focus on real learning.

Very few teams are doing training evaluations! Teams didn’t want to put pressure on learners, but it’s hard to know if a learner actually learned anything. Plus, training alone doesn’t lead to culture change. You can teach people things, but if there are no opportunities to use them, there’s still no impact.

Digital service teams are attempting to overcome the tech knowledge gap by training partners. But low investment keeps it from working. When the goal is reducing digital service team workload, the focus isn’t on the learner experience.

Expectations of training are high: digital service teams want training to change EVERYTHING. It doesn’t, “but we really want it to!” The incentives have to change too.

But we also don’t know what real training success looks like. The outcomes are unclear, so it’s hard to know what’s working and what’s not working.

You have to understand what training CAN’T do. It can’t lead to culture change, serve as the only solution, be one-size-fits-all, nor can it replace a full educational background and career. Find a happy medium where learners know why a skill is important, and how to apply it in their job. It may take more than one session!

There’s a lot of talk about hiring more techies from private sector to government, but it’s still important to train non-techies. We have to be able to trust each other and talk to each other! That helps us accept the work that we’re doing on both sides.

What training CAN do: build relationships, create advocates, empower learners, foster a culture of learning, inspire curiosity, and build incremental skills. Meet people where they are and inspire them to apply what they’ve learned.

Training recommendations

For leaders of digital service teams:

- Standard practices for measuring (and using) learning outcomes

- Open source curriculum package for plain language, service delivery, and user-centered design

- Making the case toolkit to help teams get buy-in from leadership on HCD concepts on websites

Recommendations for trainers

Make it interactive, especially when you can make it relevant to them! When making small cohorts, try getting people to work together, connecting learners after the training, and having a cohort from a single agency work on a relevant project.

Consider having a formal training program instead of one-off lessons. Define goals, offer 1:1 help (office hours and labs), and create online documentation.

Make the commitment to have a training coordinator to set goals, track metrics, adjust trainings, and encourage iteration. “Things to glow on, and things to grow on.”

Amy notes that in tech, we expect people to come to office hours with accurately-formed questions. As a former librarian, she’s used to people asking vague unprepared questions—a reference desk model. Let’s try that when working with non-techies. Remind ourselves that “learning is inconvenient.”

In the Q&A, an attendee suggests not just calling it training, but moving toward “training, learning, and adoption” so it’s built more into the org culture.

Amy and Caitlin also shared their slides because they are awesome!